November 30 - Mini Updates

We just passed Thanksgiving, and I'm going to be a bit busy until the end of the school year, so I thought I'd write some updates while I still have a bit of time during break.

I tweeted a few days ago that I'm going to do this year's advent of code in Prolog. I meant it as a joke, but it got me looking into Prolog again - I've been doing a bunch of mini exercises, and while I'm still far from competent at it, I think I will actually do this year's advent of code in Prolog. I tried writing n-queens in Prolog and found it to be much simpler than in something like Python (which makes sense - you just have to define what a satisfactory board state looks like, and Prolog just gives it to you), so I'm pretty hopeful that I'll be able to get far. If not, my fall-back language will probably be Haskell (which I'm also trying to learn).

We just sold gamut.ink, a project I worked on two summers ago. It was a really cool opportunity I got to work on a cool idea together with my brilliant friends, and I had a lot of fun. And now that we've sold it, I got the one thing that was missing from it - closure!

One last piece of news: On December 4, I'm giving a presentation on precisely the thing I talked about in the tidbit down below.

Stay tuned!

November 8 - A Primer on Measure Theory and Ergodic Theory, leading up to Birkhoff's Ergodic Theorem

As you may know, I've been doing a DRP on Ergodic Theory for the last month and a half. I recently made a twitter post about how I thought Birkhoff's Ergodic Theorem was exceptionally cool, and I got some good feedback from my followers, so I decided I'll write an entire tidbit on some (very) basic Ergodic Theory. My goal here is to write a few paragraphs about basic ideas in Ergodic Theory, focusing on the theorems rather than the proofs. I'm assuming a very cursory knowledge of measure theory - the following will set the level of my explanation: a "measure" of a set is its "area", a set is "measurable" if it has area (most nice sets are measurable), a function is "measurable" if it only sends measurable sets to measurable sets, and a function is "measure-preserving" if the preimage of a measurable set has the same measure.

The first result we get from the above definitions is Poincaré's Recurrence Theorem. Stated simply, suppose you have some measure-preserving transformation of a probability space (i.e. the whole space has area 1), and you take some subset with positive measure - imagine a disk or a ball or any set with "area". Then if you keep applying , then almost all4 the points will return back to in finite time. An important caveat is that they don't always return back to at the same time; two points that are right next to each other could return to at completely different times. But they will return!5 This theorem blew my mind when I first came across it. You start off with only the restriction that your map has to preserve measure, and you get a result that pretty much every point in your set will loop back to the set.

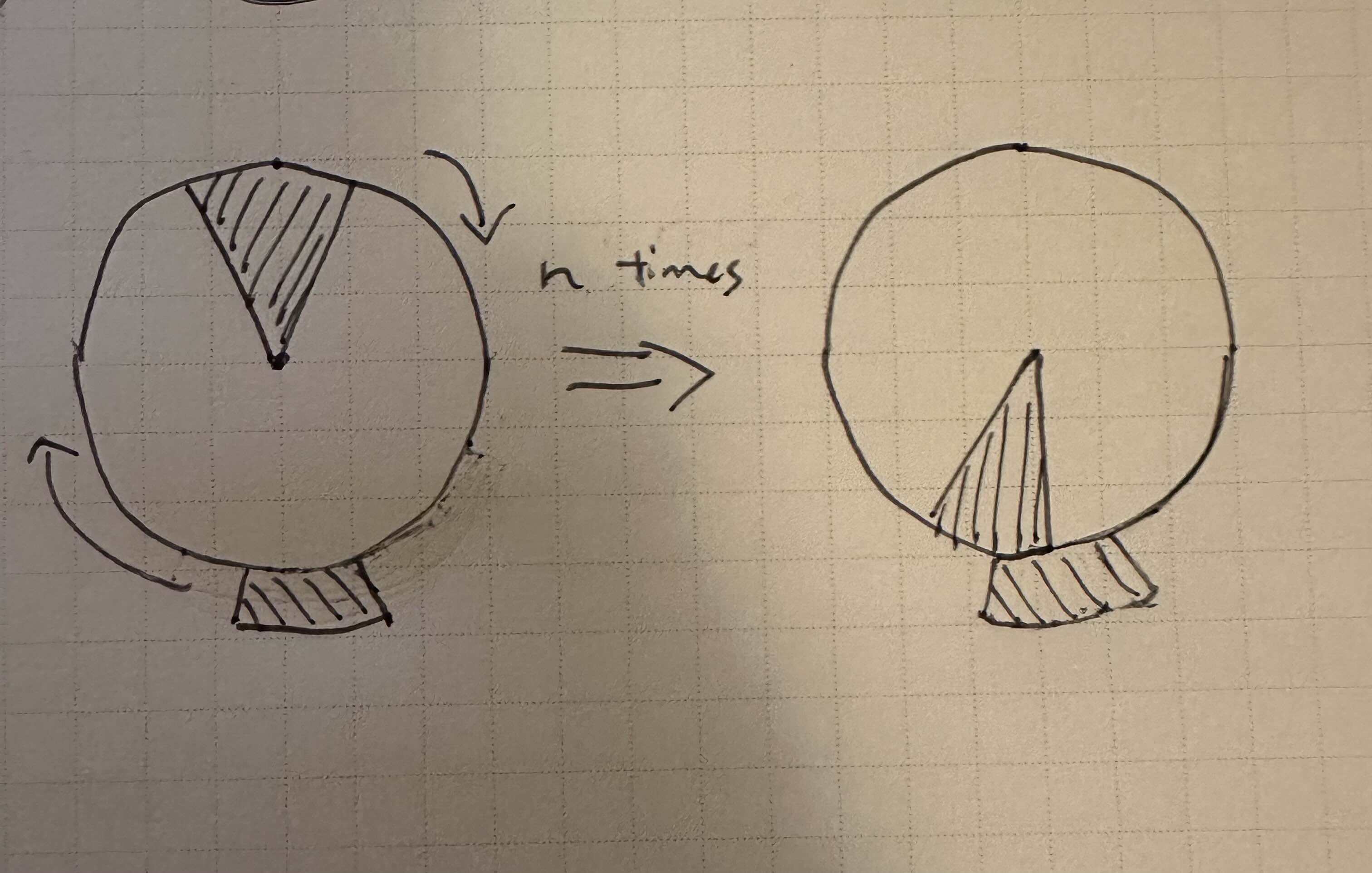

Now is a good time to define ergodic maps. A map is ergodic if the only measurable sets that satisfy have measure 0 or 1. This is the definition given in the textbook I'm using, but a nicer, equivalent definition is that is ergodic if for every pair of measurable sets with nonzero measure, the set has positive measure for some . In layman's terms, any measurable set will have a not-insignificant chunk of it sent to any other measurable set . You can think of this as a really loose kind of mixing - imagine you have a wheel and you want to rotate it by some specific angle, but you can only rotate the wheel by increments of some particular irrational angle. Of course, you won't be able to perfectly rotate your wheel to your desired angle, but you can get pretty damn close. Similarly, if you color a region of the wheel a certain color, and you color a region around the wheel another color, then after some number of rotations you're guaranteed to have those two regions overlap. Another way of thinking about it is that if you take , , , etc, and you took their union, you'd get a set of measure 1. So almost every point ends up in .

Finally, we get to talk about Birkhoff's Ergodic Theorem. Suppose we have a measurable function , and a measure-preserving map . Then converges almost everywhere to some measurable function with . More importantly, if is ergodic, then we can imagine as sending to all sorts of places around ; so must be a constant almost everywhere, and we get . If you know of the Monte Carlo method, this might look familiar. With Monte Carlo, we take random samples from our sample space, find the value of at each of them, and then average them. In our case, we're using instead of sampling. Although isn't random, it mixes the points of up enough for it to act like it's random - at least for the purposes of taking an average. So what Birkhoff says is that the "time average" (i.e. the average of the terms) is equal to the "space average" (our integral , which we're taking across ).

Lastly, I'll leave you with an application. There's a cool number theory theorem that we can prove using the ergodic theorem: Borel's Theorem on Normal Numbers states that almost all numbers in , when written in binary form, have the same number of 's as they do 's. Here's a quick sketch of the proof. Let be defined by . This map is measure-preserving and ergodic. If we let be the set of points that have a unique binary expansion6, then this set has a countable complement, so the measure of is 1. We can write any number with a unique binary expansion as for ; then . Let if and otherwise. Then , so is the average number of 's in the first binary digits of . But we know almost everywhere, and , so the frequency of in the binary expansion of almost every number is . :)

I think this illustrates the usefulness of Ergodic Theory. Hope you learned something, or had fun reading. I'm still learning and I might not be the best at explaining things, so let me know if I made any mistakes or if I should change up the wording or if you have any questions. (Shoot, that reminds me I need a comment box or some better way to reach me. If you don't know me personally, sending me an email is probably your best bet.) Thanks for reading this!

November 1 - Updates, plan for site, some sample math

It's crazy how fast a month can go by. It's getting much more chillier in Madison (I would be so much more enthusiastic about it if my radiator worked). I wanted to write something to start off the month.

One exciting update about the site: I added footnote support to Kobo, and by extension, this site you're reading right now.1 My vision is that these footnotes would be helpful when writing math notes. I feel like one of the main themes of math textbook discourse is bevity v.s. verbosity. A lot of people complain about textbooks saying something along the lines of "xyz follows simply from abc" when xyz doesn't follow simply from abc. This is especially the case for textbooks that are concise, like Lang's Algebra. On the other hand, if you spell out every single part of your proof you end up with really dense proofs that span multiple pages and are a pain in the ass to work through (as is the case with some of the proofs in Dummit & Foote). My proposed solution is to write the nice prose like you would see in Lang, with lots of footnotes within the prose pointing to the verbose mathematical derivation and symbolic manipulation.2

My other updates are a bit less exciting. I went to Boston to meet up with some friends and see a concert. I started reading Matsumura's Commutative Ring Theory, and I'm also planning on picking up Milnor. We've reached mixing in my Ergodic Theory DRP. My delete key on my macbook is gummy and is messing with my ability to write up pset solutions and tidbits. I started learning haskell, and I'm working through the Project Euler problems using haskell as an exercise3.

One last update: I'm going to give a talk on Category Theory sometime later this month. It's going to be an introduction to Category Theory, specifically using Ologs as a way of introducing important Category Theory concepts (like composition, functors, limits, presheafs, etc). I'll upload the slides (and maybe a recording!) once that's done. That's it for now I guess.

Footnotes

-

Test testing test ↩

-

Test to see that katex properly renders in footnotes: ↩

-

Here's the repo to my solutions. I'm still ass at haskell and FP in general, so any feedback is welcome! ↩

-

"Almost all" is an actual technical term. Specifically, is almost all points of if and have the same measure. Take for example the two intervals and . Then , but they both have the same length of 1. That's because the points and on the ends of have 0 length (because they're points), so hacking them off doesn't change the length of our interval. ↩

-

At least, almost all of them will. ↩

-

Some numbers don't have a unique binary expansion. For instance, . In fact, all of these numbers are finite sequences that end with a 1, so they're countable. ↩